The University of Colorado Boulder's VisuaLab is an interdisciplinary research laboratory headed by Dr. Danielle Albers Szafir. Situated within the ATLAS Institute and Department of Computer Science, the VisuaLab explores the intersection of data science, visual cognition, and computer graphics. Our goal is to understand how people make sense of visual information to create better interfaces for exploring and understanding information. We work with scholars from psychology to biology to the humanities to design and implement visualization systems that help drive innovation. Our ultimate mission is to facilitate the dialog between people and technologies that leads to discovery.

Our research centers on four major thrusts:

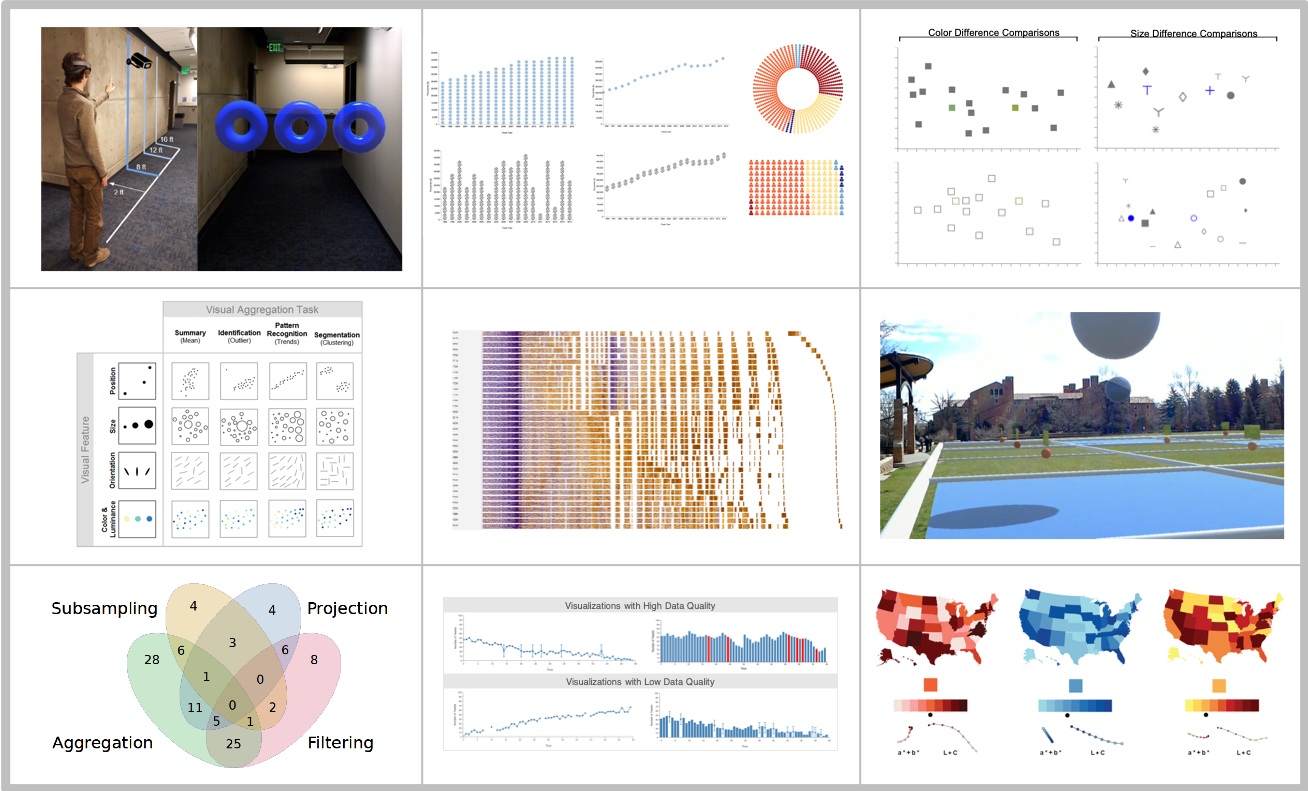

Cognition & Visualization: What do people see when they look at a visualization? How does that information translate to insight and knowledge? We conduct empirical studies to model the perceptual and cognitive processes involved in data interpretation to create more scalable and effective visualizations.

Building Better Visualizations: How can visualizations best help people make sense of data? How can we facilitate more effective visualization design? We use insight from empirical studies and user-centered design to create novel systems for designing visualization tools and for gaining new insight into data across a broad variety of domains.

Systems for Human-Machine Collaboration: How might people and machine learning algorithms work collaboratively to explore data? How might systems provide a dynamic bridge between ML's scalability and human expertise? We study how visualization can facilitate transparent insight into machine learning and build systems to leverage these techniques to support collaborative analysis and decision making.

Novel Interfaces for Data: How might designers best leverage new interface technologies to explore data? What new insights and opportunities do these technologies afford? We design novel visualization systems for mobile and mixed reality devices to integrate data and decision making into the real world.

See our Projects page for details and links to past and ongoing projects.

We are always open to new researchers and new collaborations. Join a diverse cohort of students as part of an interdisciplinary community ranked in the top ten for HCI research. For more information about the available projects, see our listing of open positions or contact Dr. Szafir directly.

News from the Lab:

12.2020: Szafir, Samsel, Zeller, & Saltus' "Enabling Crosscutting Visualization for Geoscience" was accepted to CG&A.

12.2020: Szafir & Szafir's "Connecting Human-Robot Interaction and Data Visualization" was conditionally accepted to HRI 2021.

12.2020: Payne (NYU), Bergner (NYU), West, Charp, Shapiro, Szafir, Taylor, & Desportes' (NYU) "danceON: Culturally Responsive Creative Computing for Data Literacy" was conditionally accepted to CHI 2021.

12.2020: Wu, Petersen, Ahmad, Burlinson, Tanis, & Szafir's "Understanding Data Accessibility for People with Intellectual and Developmental Disabilities" was conditionally accepted to CHI 2021.

12.2020: "Grand Challenges in Immersive Analytics", work from a large joint collaboration including Whitlock & Szafir, was conditionally accepted to CHI 2021.

11.2020: Bae & West's CyborgCrafts was accepted to the TEI Student Design Competition. Congrats, Mary & Sandra!

10.2020: Muesing, Ahmed, Burks, Iuzzolino, & Szafir's "Fully Bayesian Human-Machine Data Fusion for Robust Online Dynamic Target Characterization" was accepted to JAIS.

08.2020: Work by Elliott (UBC), Xiong (Northwestern/UMass), Nothelfer (Nielson), & Szafir was awarded a Best Paper Honorable Mention at IEEE VIS 2020.

08.2020: Do, Bae, Gyory, & Szafir received an NSF EAGER grant for work on using physicalization in informal education.

07.2020: Whitlock, Szafir, & Gruchalla's (NREL) "HydrogenAR: Interactive Data-Driven Storytelling for Dispenser Reliability" was accepted to ISMAR 2020.

05.2020: Work by Smart, Wu, & Szafir was featured in the American Geophysical Union's Eos Science News.