The CU VisuaLab brings researchers together to tackle innovative questions in visualization, data analytics, and computer graphics, driven by real-world challenges. Below is a sample of ongoing projects in the VisuaLab. For more information about these or other projects, please contact Dr. Szafir.


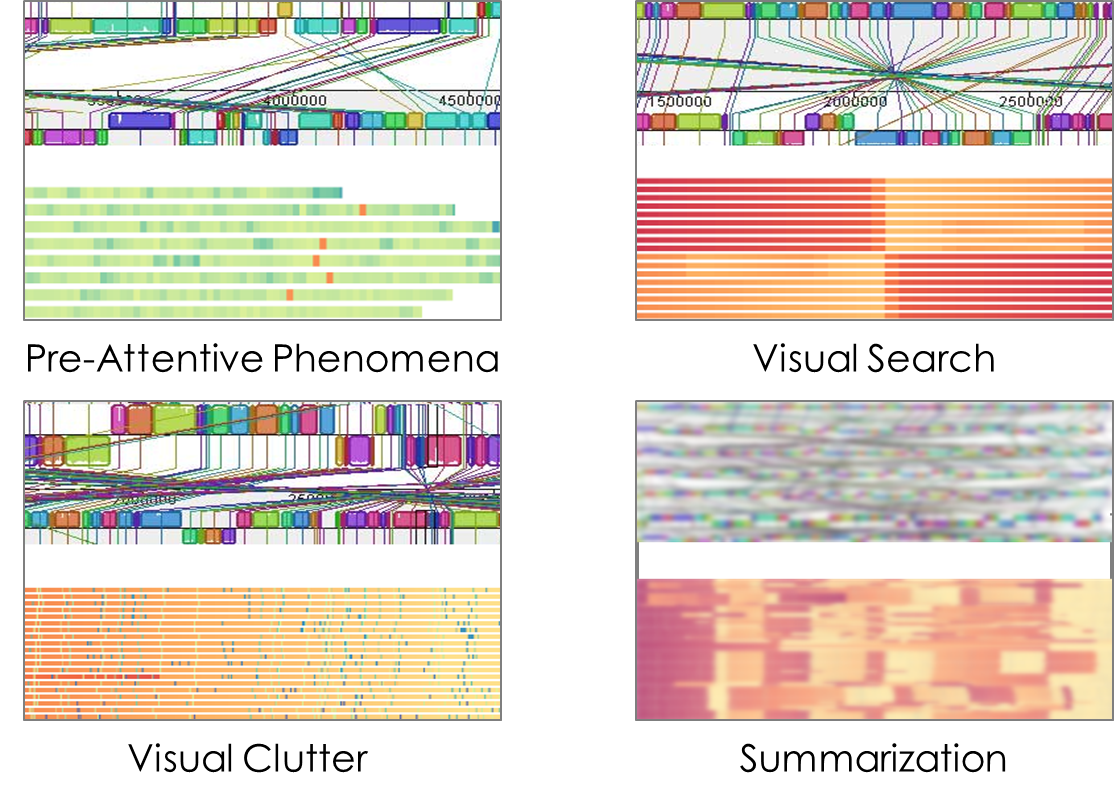

Our understanding of visualization design is conventionally based on how well people can compare pairs of points. As datasets grow, visualization must move beyond small-scale design thinking to understand how design can support people in making sense of large collections of data points. Drawing on psychology, this work uses experiments to study how people estimate properties across collections of points in a visualization (a process known as visual aggregation), and how visualizations might be designed to support these judgments. The results of these efforts have driven scalable systems in domains ranging from biology to the humanities.

VisuaLab Personnel: Stephen Smart, David Burlinson

Example Publications:


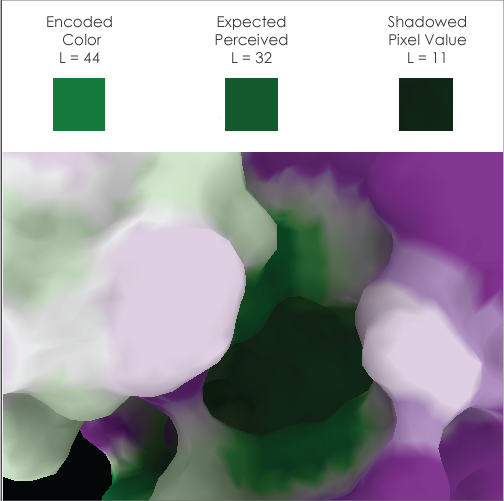

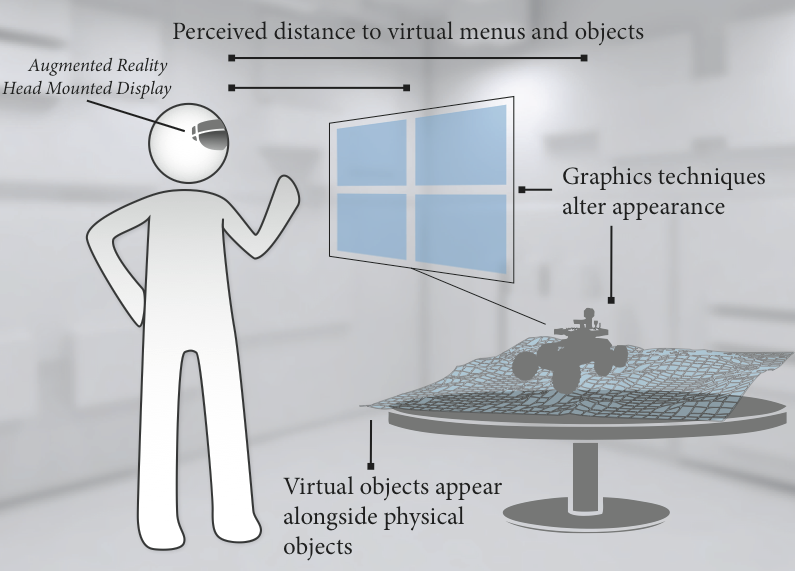

Consumer display technologies are evolving rapidly, giving people access to displays of different shapes, sizes, and capabilities, such as mobile phones, head-mounted displays (HMDs), and smartwatches. These new displays open up opportunities for analytics tools that help people make sense of our increasingly data-driven world. This project studies how people perceive and interact with visual information across different display technologies. We develop guidelines, techniques, and tools that leverage the capabilities of these technologies to enhance the ubiquity, accessibility, and effectiveness of data analytics and immersive visual applications.

VisuaLab Personnel: Matt Whitlock, Keke Wu

Example Publications: